AF 447 Thread No. 7

Join Date: Jan 2005

Location: W of 30W

Posts: 1,916

Originally Posted by Diagnostic

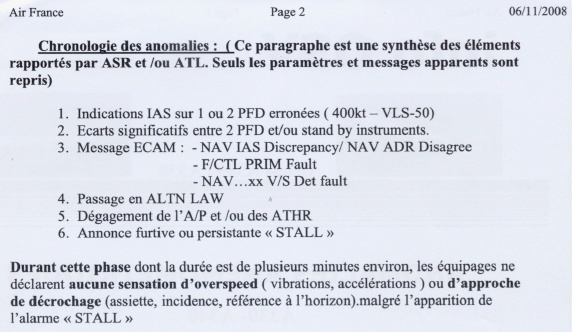

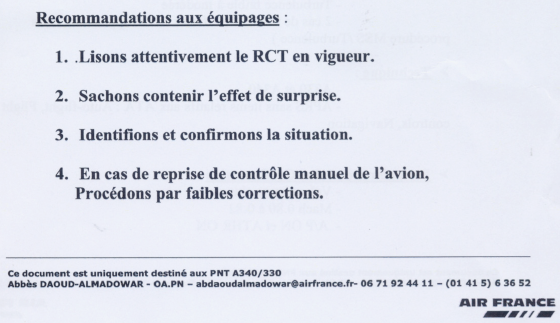

Therefore my hypothesis is that the stall warning was being deliberately ignored as they (especially PF) thought it was a malfunction, as part of the same instrumentation problem which was affecting the IAS.

The STALL warning is mentioned in such a way that it does not seem to deserve specific attention and could almost be disregarded (my own interpretation of the memo).

6. Spurious or persistent STALL warning

When you read the memo, it does seem normal to have to deal with a continuous STALL warning without taking any necessary action ...

What to say about the recommendations:

2. Do not panic - Be in control.

In the absence of specific simulator training, that memo should have stated the necessary steps:

- PF Maintain 2.5 degrees of pitch

- PNF Set the thrust parameters as they are usually in CRZ

- Wait for improvement

Join Date: Feb 2011

Location: Nearby SBBR and SDAM

Posts: 875

Hi,

Old Carthusian, if I may respectfully ask and comment on some points:

Everything?

How can you be so sure of that? The RHS was not even recorded. This information may be lost forever, like the airspeed that was not measured during the "sensors outage".

Well, this crash is history. What about this kind of warning on other flights in similar situations?

If training alone were sufficient, why did they decide to create an HF study group? Was the crew affected only by "lack of or inadequate training"? Would other crews (with training) be immune to similar issues? And what if "multiple faults" happen (or are shown) simultaneously? The big bird (388) climbing out of Changi had multiple "simultaneous faults" due to electrical cabling (harness) damage resulting from the uncontained failure of engine #2.

We actually don't know why (and may never know). Do we have all the factual information?

What other reasons can you suggest or mention, please?

As a designer I would like to benefit from "training should enable you to deal with the unexpected". We never know what to expect; anticipating everything is virtually impossible. You can anticipate only a subset. Creativity in some cases "makes the difference". I am not against SOPs, etc.

With a crew that (it seems) failed to identify a "simple" UAS and subsequently stalled the jet? The SIM session made and summarized by TD shows how difficult the "task" would be.

The HF study was motivated by this (and other facts).

Certainly.

We are anxious to read the final report on this.

Lack of (training) at cruise FL

Everything keeps on coming back to training, SOPs and CRM.

(and remember the only instrument that was not reliable was the Airspeed Indicator)

It is not that I am against adding such a warning but that the warning would not necessarily have made a difference to their response.

training should enable you to deal with the unexpected.

There was no attempt to use the SOPs or to diagnose the problem.

This is more indicative of flight crew problems

training should enable you to deal with the unexpected.

Even the stall was recoverable

The warning didn't help.

Unfortunately this is a crew caused accident

with very little help from the machine

It touches on pilot training and airline culture and how these are carried out with automation.

Last edited by RR_NDB; 7th Apr 2012 at 04:55.

Join Date: Feb 2011

Location: Nearby SBBR and SDAM

Posts: 875

Very important Inputs to the crew (received at training)

Hi,

CONF iture:

Another relevant "input" that could partially explain the attitudes of the PF, PM and Captain.

Their (crew) "processor output" will very probably be understood. The output was not random; there was a clear bias. The "input" of this memo could have biased their actions from the beginning, and during a significant part of the stall, even during the zoom climb.

It may also have affected the "model" the PF built and used in applying "persistent NU".

"Sachons contenir l'effet de surprise" ("Let us learn to contain the surprise effect")

In the absence of specific simulator training, that memo should have stated the necessary steps:

Last edited by RR_NDB; 8th Apr 2012 at 05:44.

Join Date: Jul 2009

Location: The land of the Rising Sun

Posts: 187

Diagnostic

If I may, I will focus on the training aspect of your posts. This does not mean that I disregard the CRM and SOP issues. The question would be: is the new warning necessarily going to improve safety, or is it going to lessen it? Adding a warning is not necessarily a good thing. With regard to training: hard training, easy execution. One needs to practice unusual situations frequently so that one knows how to react in an unusual situation. Training enables one to deal with the situation more effectively; by relying on your training you are better prepared. It is nice if you can train for the specific situation, but even so, as others have so eloquently put it in other threads, the pause, evaluate, act triad works wonders in such situations.

I can suggest several explanations of why the crew acted the way they did, all of which would fit the facts. If you wish I will sketch a couple of scenarios out in a later post, but at the moment I would rather not speculate beyond the evidence we have. This accident also links in with Air France pilot culture and, I believe, that of other airlines who have developed a 'corrupted' pilot culture. The existence of a warning does not necessarily lead to increased safety; better training does.

Allow me to comment on the issues addressed by OC to Diagnostic.

The crew was surprised, not by the UAS event (which had not yet been identified), but by the AP dropping out and the need to fly manually. That sudden manual flying chewed up most of the crew's attention and hindered them in identifying the cause of the problem (UAS). The cause could have been any of several things, including the WX situation and turbulence. The minimal hand-flying skills led to the zoom climb.

It could have made a difference from the beginning. Knowing that the cause of the AP drop-out is UAS, or even knowing it before the AP drops out and manual flying is imminent, saves time in analyzing the situation and helps in initiating the necessary steps. The zoom climb would not have been part of the procedure, as often mentioned before.

Because the initial incident was not fully identified. The system knew that the speed had become unreliable, but the indication to the crew was the handover to manual flying in an expected turbulent WX situation, without communicating the known information (UAS) about the reason for the handover.

I go with Diagnostic: most of the other UAS events mentioned were not handled as they should have been, and in most of those cases the identification of the UAS was marginal, late, or nonexistent. There is lots of room for improvement, not only on the training issue.

IMHO MIL crews are trained to work around the unexpected event or problem, as they can't plan a military mission and the associated tasks in great detail; and even if they can, things turn out differently from the plan in the end due to a multitude of possible factors, including bad guys trying to shoot holes in your plane.

But air transport crews are best trained in handling standard and non-standard situations according to SOPs and CRM. To implement those procedures, identification of the problem has to be quick and simple; it is a prerequisite for implementing the correct procedure. The ECAM is the best example of that need.

Even when the PNF mentioned "..we have lost speeds...", we can't be sure that it dawned on them that the AP disconnect had anything to do with the missing speed indication and was initially just a simple UAS situation, because it was neither acknowledged by the PF nor was the necessary procedure mentioned.

The first necessary step, "maintain aircraft control", was already hindered by the unknown cause of the problem and the lack of manual handling skills. Had the cause (UAS) been known from the beginning, his handling might have been simplified, as PJ2 states, to doing nothing. Instead they failed at "maintaining aircraft control", never "analyzed the situation" and could therefore not "take proper action".

It comes down to the misidentification of a problem (UAS), which could have been communicated to the crew in a clearer and more expeditious way.

OC

Supposing the crew were surprised then training should kick in. A pause, a scan of the instrument panel (and remember the only instrument that was not reliable was the Airspeed Indicator).

OC

PJ2 also pointed this out - this was not a serious incident at first. However, the crew actions made it into a serious incident. It also seems that the PFs scan broke down almost immediately and that the PNF did not intervene sufficiently. So a UAS warning might not have made any difference.

OC

The cockpit voice transcript indicates a very rapid 'over reaction' to the initial incident. Once again this is not indicative of an interface issue. There was no attempt to use the SOPs or to diagnose the problem.

Originally Posted by gums

Lost a friend at Cali back in 95 or 96 or...... Stoopid flight management system turned the jet the wrong way and they noticed the error but kept descending while turning back to the approach fix. Not good.

So goes the discussion about AF447 too. We let our imagination run wild and invent evil computers, megastorms, complete instrument failures etc. just to turn our minds away from the picture that scares us because we can easily imagine our own portrait in it: the pilot who forgets how to fly in midair. From what is known so far, the final crew of F-GZCP might have been one of the best in the fleet, or one of the worst, or anywhere in between; the preliminary reports don't say a lot, but a few things are certain: 1) they had been deemed competent to perform the flight by their superiors and training department; 2) they lost the idea of what was happening, what they should do and what the aeroplane was capable of. Saying they were incompetent (or worse) is just another nervous let's-get-over-this-quick proposition and, just like its twin, let's-speculate-about-technology-we-don't-understand, is a manifestation of the fear of grappling with the real issues raised (again) by the spectre of AF447.

At our level of technology, we can't have an ECAM/EICAS procedure, signal light, voice warning or whatever that would warn the crew of unreliable airspeed. It is not as simple as a plain failure: the speed signal and indication are there, but it takes intelligence to compare them with the known weight, thrust level and attitude of the aeroplane to decide which indication is realistic and which is not. No matter how much the capabilities of our computers are increased, they are still computers; they are capable of much faster operation but are not a mil closer to true intelligence than the first pocket calculators. Now please, do prove me wrong. Not by an infantile "Dear aeroplane systems designers, I don't know the principles behind it but I demand it should work in such-and-such manner", but rather by designing the device that will work as you propose. I'm serious here. One well designed system will do more good than a million complaints about badly designed ones.

Unreliable airspeed is basically a loss-of-airspeed procedure, aggravated on the modern airliner by false alerts thrown up by computers unable to overcome their IF...THEN logic. PJ2 mentioned his experience on the B767, which mirrors the experience of the Aeroperu 603 crew: with all static ports blocked there could be no valid measurement of airspeed and altitude, and the crew was bombarded by false failure messages such as "MACH TRIM" and "RUDDER RATIO". At one point they got stall and overspeed warnings going simultaneously. Yet they kept the aeroplane flying and only lost their battle when they failed to realize ATC was giving altitude from their Mode C altitude and not from elevation primary radar. Anyway, there have been ASI failures for as long as there have been ASIs, and the drill is the same on every fixed wing, be it a Rans or an A380: pitch+power=performance. Despite the exaltations of some, there is nothing indicating that either attitude or power information was lost at any time. It just wasn't utilized.

Proposed unusual attitude training will do exactly nothing towards eradicating AF447-type accidents. The issue was not a botched recovery from high AoA; the issue is that recovery was not even attempted, as the stall was not recognized. An even more important issue is that the aeroplane was actively pulled into the stall, a factor missing from every other incident listed in interim2, which makes clear that some crews managed to botch things up and get the stall warning. However, all of them pushed when warned, some losing a chunk of altitude in the process.

Join Date: Jul 2009

Location: France - mostly

Age: 84

Posts: 1,682

Originally Posted by Diagnostic

"This is a UAS situation, all my pitot probe pressures are different so I have to disconnect the AP - recommend you fly pitch & power which for this alt is X/Y"

Thirty knots drop of IAS in one second is not sufficient to identify UAS. It is not inconceivable that it would occur as a real change of airspeed in a windshear/downburst close to the ground or in the vicinity of the jetstream at altitude. Considering the multitude of possible causes and flight conditions, IMHO the computers cannot reliably identify UAS, but must leave the diagnosis of the problem to intelligent humans.
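To make the ambiguity concrete, here is a minimal Python sketch of the two checks discussed in this exchange: a rate-of-drop trigger on each of three airspeed channels, and a cross-channel disagreement check. This is not the aircraft's actual monitoring logic. The function names, the 20 kt disagreement threshold and the sample speeds are assumptions made up for illustration; only the 30 kt in one second figure comes from the thread.

```python
from typing import Sequence

DROP_KT = 30.0      # size of drop quoted in the thread
WINDOW_S = 1.0      # time window quoted in the thread
DISAGREE_KT = 20.0  # hypothetical cross-channel disagreement threshold


def sudden_drop(prev_kt: float, curr_kt: float, dt_s: float) -> bool:
    """True if one channel fell by at least DROP_KT within WINDOW_S."""
    return dt_s <= WINDOW_S and (prev_kt - curr_kt) >= DROP_KT


def channels_disagree(speeds_kt: Sequence[float]) -> bool:
    """True if the spread between the channels exceeds DISAGREE_KT."""
    return max(speeds_kt) - min(speeds_kt) > DISAGREE_KT


def classify(prev: Sequence[float], curr: Sequence[float], dt_s: float) -> str:
    """Label one sampling step across all channels (illustrative only)."""
    drops = [sudden_drop(p, c, dt_s) for p, c in zip(prev, curr)]
    if not any(drops):
        return "no trigger"
    if all(drops) and not channels_disagree(curr):
        # Every channel fell together and they still agree: this fits a
        # real airspeed change (e.g. windshear) as well as a common-mode
        # probe failure, so the data alone cannot tell them apart.
        return "ambiguous"
    return "suspect sensor"
```

With these assumed numbers, `classify([275, 275, 275], [275, 60, 275], 1.0)` gives "suspect sensor", while `classify([275, 275, 275], [240, 240, 241], 1.0)` gives "ambiguous". The second case is the one at issue here: the rate trigger fires, but nothing in the airspeed data distinguishes a real change from a simultaneous fault.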

P.S. Sorry Clandestino, cross-posted

Last edited by HazelNuts39; 7th Apr 2012 at 11:40. Reason: P.S.

Join Date: Feb 2011

Location: Nearby SBBR and SDAM

Posts: 875

Time saver

Hi,

RetiredF4:

Saves time in analyzing the situation and helps initiating the necessary steps. Reducing (or even eliminating) IMHO important "uncertainty". Surprises can bring problems.

Murphy's Law "rounds the picture".

"Stressing" Human Factors

Currently the System has the information (GIGO "feature"). This is an "insider information". Not immediately given to the crew. And could be. The Airbus paper mentions the pilots NEED to do the scan to identify the issue. IMHO this can be improved:

I think so (in this "UAS aspect").

Useful comparison.

might!

It comes down to the misidentification of a problem (UAS), which could have been communicated to the crew in a clearer and more expeditious way

could

in a clearer and more expeditious way

K.I.S.S. principle IMHO is MANDATORY for the "interface"

Especially in "difficult conditions" (WX, IMC, etc.), in order to stay further from the "FUBAR threshold"

That sudden manual flying chewed up most of the crew's attention and hindered them in identifying the cause of the problem (UAS). The cause could have been any of several things, including the WX situation and turbulence.

The system knew that the speed had become unreliable, but the indication to the crew was the handover to manual flying in an expected turbulent WX situation, without communicating the known information (UAS) about the reason for the handover.

There is lots of room for improvement, not only on the training issue.

IMHO MIL crews are trained to work around the unexpected event or problem, as they can't plan a military mission and the associated tasks in great detail; and even if they can, things turn out differently from the plan in the end due to a multitude of possible factors, including bad guys trying to shoot holes in your plane.

But air transport crews are best trained in handling standard and non-standard situations according to SOPs and CRM. To implement those procedures, identification of the problem has to be quick and simple; it is a prerequisite for implementing the correct procedure. The ECAM is the best example of that need.

The first necessary step, "maintain aircraft control", was already hindered by the unknown cause of the problem and the lack of manual handling skills. Had the cause (UAS) been known from the beginning, his handling might have been simplified, as PJ2 states, to doing nothing. Instead they failed at "maintaining aircraft control", never "analyzed the situation" and could therefore not "take proper action".

Last edited by RR_NDB; 8th Apr 2012 at 13:56.

Join Date: Jan 2005

Location: W of 30W

Posts: 1,916

Originally Posted by HN39

The trigger was that the system detected a sudden drop in one of the three airspeed values.

...

Thirty knots drop of IAS in one second is not sufficient to identify UAS.

Much of the current debate is becoming wound-up by hindsight (the spinning hamster wheel). Often in such cases there is an inadvertent drift towards ‘blame and train’, or towards attempting to fix the problems of a specific accident and thus overlooking generic issues.

Whilst a different form of computation may have prevented this accident, it is unlikely that the industry will think of all possible situations, and even judge some as too extreme to consider – problems of human judgement, cost effectiveness, ‘unforeseeable’ scenarios.

Similarly a different pitot design could have prevented the situation developing, but this action was in hand. In hindsight, the need for at least one modified pitot (and associated crew action) indicates poor judgement in the use of previous data (no blame intended – just a human condition), yet this was perhaps tempered by practicality (ETTO).

Furthermore, knowledge of the icing conditions from research and previous engine problems could have required a temporary restriction in flying in or close to such conditions.

The generic issues here are the failures to learn from previous and often unrelated events, and in judging the risks associated with the identified threats – current state of knowledge or application of knowledge.

None of the above involves the crew; the objective is to protect the sharp end from the ambiguities of rare or novel situations such that their inherent human weaknesses are not strained by time critical situations.

Where crews do encounter these rare situations, then the limited human ability is an asset (human as hazard or human as hero). Protection should not, and often cannot, be achieved by more and more SOPs. Human performance will vary according to experience, knowledge, and capability. We cannot expect the detection and assessment of rare situations to be consistently good, we hope that the assessments and actions are sufficient, and thus safe, but in balance with those ‘miraculous saves’ celebrated by the industry, we have to suffer a few weak performances as part of the norm (again no blame intended) we are not all the same.

Many aspects of the high-level generic view are summarised by J. Reason – “you can’t always change the human, but you can change the conditions in which they work”. However this view should not be restricted to the immediate human-system interface, there are many more facets to the SHEL model of HF.

Another view from the same author is that ‘We Still Need Exceptional People’. This requires the need for continuous learning at all levels in the industry, not just more crew training but real learning in design, regulation, operations, crew, and accident investigation.

This accident, situation, and activities before during and after the event, represents a rare and novel situation, even perhaps ‘unforeseeable’, but from each there are aspects which we must learn. But how can we ensure that we learn the ‘right’ lessons?

clandestino

A decade before his lifespan was abruptly terminated on the slopes of El Deluvio, your unfortunate friend won the accolade of USAF instructor of the year (IIRC he was flying Rhino at the time), which makes him far, far, far above average pilot in anyone's book.

He started pilot training in 1979, and when he left the air force 7 years later to become a flight engineer on the B727, he had accumulated a total of 1,362 hours.

Clandestino

even the best pilots can underperform occasionally

It's astonishing how reading thousands of pages on the various AF447 threads could lead to the conclusion that only this very unfortunate AF447 crew was able to make such mistakes, all others being skygods and infallible, and that thoughts about improving the systems and the man-machine interface are therefore unnecessary and a waste of time.

Join Date: Feb 2011

Location: Nearby SBBR and SDAM

Posts: 875

Agree! Wise content.

Hi,

safetypee:

But how can we ensure that we learn the ‘right’ lessons?

Being diligent, only "helps". No guarantees.

Thanks for very good links. Will comment asap on your thread.

safetypee:

However this view should not be restricted to the immediate human-system interface,

We Still Need Exceptional People

This accident, situation, and activities before during and after the event, represents a rare and novel situation, even perhaps ‘unforeseeable’, but from each there are aspects which we must learn.

Last edited by RR_NDB; 8th Apr 2012 at 14:48.

Join Date: Feb 2011

Location: Nearby SBBR and SDAM

Posts: 875

Obsolete AS probes "data processing"

Thirty knots drop of IAS in one second is not sufficient to identify UAS.

There are (quite common) techniques to "detect" the "signal" (threshold) "buried" in the noise ("garbage").

Net result (reliability) could be high. And PRIOR to GIGO threshold (protection) of the System. (Truly "non causal")*

* To the crew. I.e. Before Law change.
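The post does not name a technique, so as one possible illustration, here is a minimal one-sided CUSUM change detector in Python. CUSUM is a standard way of flagging a sustained downward shift in a noisy signal while ignoring isolated spikes. The function name, parameters and sample values are hypothetical, and nothing here describes how any real air data system works.

```python
from typing import Iterable, Optional


def cusum_drop_index(samples: Iterable[float], reference: float,
                     slack: float, threshold: float) -> Optional[int]:
    """Return the index at which a sustained drop is declared, else None.

    One-sided CUSUM: accumulate how far each sample falls below
    (reference - slack); the sum is clamped at zero so that normal
    noise around the reference never builds up.
    """
    s = 0.0
    for i, x in enumerate(samples):
        s = max(0.0, s + (reference - slack - x))
        if s > threshold:
            return i
    return None
```

With a reference of 275 kt, a slack of 5 kt and a threshold of 20 (all assumed values), the noisy but steady series `[275, 273, 277, 274, 276]` returns `None`, and so does the single spike in `[275, 255, 275, 276]`. The sustained shift in `[275, 274, 260, 258, 255]` is declared at index 3. A detector like this only says that the signal changed. It cannot by itself say whether the change is real or a sensor fault, which is the objection raised earlier in the thread.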

While the systems were announcing the multitude of consequential warnings, and the UAS was appreciated by the crew at least at some level, attitude, power and altitude were all reading correctly. The crew were perhaps looking for a complex failure of their complex machine where none existed. I wonder if that psychology, and how to prevent it causing brain freeze, isn't what needs some attention. Adding further logic branches to be half remembered doesn't sound compelling.

safetypee;

An excellent, thoughtful and thought-provoking post, thank you and thank you for the links.

In safety work it is not always easy to learn how, where and when to place one's "focus". We can "tune in" varying "causes" according to our models of this and that, and even lend to such models' details a taxonomy which can at once legitimate such approaches and even explain but also carry the potential to limit such approaches unintentionally, a sort of "auto-immune" disease of process, as it were.

Yes, I think so. Interestingly, this describes some of the system characteristics now known to have occurred prior to 9/11, and, as we are slowly learning, prior to the 2008 financial and to a certain extent, social meltdown in the U.S.

franzl;

That is a very true statement.

It's astonishing how reading thousands of pages on the various AF447 threads could lead to the conclusion that only this very unfortunate AF447 crew was able to make such mistakes, all others being skygods and infallible, and that thoughts about improving the systems and the man-machine interface are therefore unnecessary and a waste of time.

First, I know from a few "first-hand" experiences in a number of types including the A330 and A340 that there is no such thing as infallibility in the cockpit. I have plenty of colleagues who can say the same thing. Anyone who does high-risk work (doctors/nurses/engineers/pilots) will have things which he/she has done that keep them awake at night. It is testimony to the success of processes in place which provide redundancy and resiliency in mitigating systems that the accident rate is what it is.

Anyone who flies and is contributing to this discussion knows this very same thing but that sense of someone's approach can't always come through in just a post or two.

If comments default to blame or dissing a crew, the contributor hasn't flown long enough, they are living an illusion informed only by ego, or they aren't a pilot.

Rather than agreeing or disagreeing then, I would like to recognize that over the life of eight or so threads on this accident, that while we have some responses which seem to do this they always seem to fade away while those who do this work (flying transports, engineering safety systems, etc) on a regular basis do have and do provide broader perspectives. Most know that "blaming this crew" doesn't cut it and dooms any responses to pedantic repetitions of the notion of "primary causes", leaving us to chase down, focus upon and fix "the cause" while waiting for the next cause. The simplest example is, "the accident occurred while approaching runway 31L so we won't use "runway 31L" anymore. I thought the ETTO presentation was worth examining in this light. And as many have clarified, focusing on the crew is not the same thing as blaming the crew.

In this, the BEA "Human Factors" group have a Herculean task.

An excellent, thoughtful and thought-provoking post, thank you and thank you for the links.

In safety work it is not always easy to learn how, where and when to place one's "focus". We can "tune in" varying "causes" according to our models of this and that, and even lend to such models' details a taxonomy which can at once legitimate such approaches and even explain but also carry the potential to limit such approaches unintentionally, a sort of "auto-immune" disease of process, as it were.

The generic issues here are the failures to learn from previous and often unrelated events, and in judging the risks associated with the identified threats – current state of knowledge or application of knowledge.

franzl;

Quote:

Clandestino

even the best pilots can underperform occasionally

Clandestino

even the best pilots can underperform occasionally

It´s astonishing how reading thousands of pages on the various AF447 threads could lead to the conclusion, that only this very unfortunate AF447 crew was able to do such mistakes, all others being skygods and unfailable, and therefore thoughts about an improvement of systems, of the machine man interface is not necessary and a waste of time.

Anyone who flies and is contributing to this discussion knows this very same thing but that sense of someone's approach can't always come through in just a post or two.

If comments default to blame or dissing a crew, the contributor hasn't flown long enough, they are living an illusion informed only by ego, or they aren't a pilot.

Rather than agreeing or disagreeing then, I would like to recognize that over the life of eight or so threads on this accident, that while we have some responses which seem to do this they always seem to fade away while those who do this work (flying transports, engineering safety systems, etc) on a regular basis do have and do provide broader perspectives. Most know that "blaming this crew" doesn't cut it and dooms any responses to pedantic repetitions of the notion of "primary causes", leaving us to chase down, focus upon and fix "the cause" while waiting for the next cause. The simplest example is, "the accident occurred while approaching runway 31L so we won't use "runway 31L" anymore. I thought the ETTO presentation was worth examining in this light. And as many have clarified, focusing on the crew is not the same thing as blaming the crew.

In this, the BEA "Human Factors" group have a Herculean task.

Last edited by PJ2; 7th Apr 2012 at 18:07.

It comes down to a misidentification of a problem (UAS), which could have been communicated to the crew in a clearer and more expeditious way.

We both know that identifying the problem is a fundamental aviation principle. If there is difficulty in identifying the problem, wait. As in many abnormals which occur, there was no emergency, no need to act unilaterally, instantly. The safety of the aircraft was never in question (that's possibly a hindsight observation). Why was action taken, vice not? That is what it comes down to, I think. And this point is germane to the discussion where crews did not immediately identify or respond 100% correctly, because in all events but this one there was no accident. Why?

I do see your point - a warning vice "circumstantial evidence" so to speak, would probably stop action and cause a change in behaviour but then the question becomes, At what point do we stop designing for such things?, and the larger question is, In terms of interventions and attention-getting, what is the balance between pilot and automation? There are within us all, the sources of an accident - do we expect that design and engineering, and then Standards & Training/SOPs/CRM/HF (SHEL), will resolve all these issues? If not, what is acceptable and why?

PJ2

I do see your point - a warning vice "circumstantial evidence" so to speak, would probably stop action and cause a change in behaviour but then the question becomes, At what point do we stop designing for such things?

The problem lies not in the difficult tasks which have to be fulfilled routinely, but in the one-time ones, which we still leave the pilots to do. But with limited knowledge and no training (knowledge and training develop experience) even simple tasks can lead to disaster.

Back to the AP: If that one is forced off due to mechanical loads or any not computable malfunction, then so be it. But when it is deliberately switched off by the system after thorough evaluation of its inputs in straight and level flight, a courtesy warning prior to drop-off should not be too hard or expensive to design and implement. It's just that nobody thought about it until now.

franzl;

Quote:

And I fear the new generation of pilots / system managers does not want anything back from the past and will accept any gadget which relieves some workload and some associated necessary training.

The dumbing-down of aviation has been going on since the early 80's. Automation in many eyes IS the third pilot, and training is about teaching pilots to push the right buttons. As TTex600 says (Post #1292), "Airbus training is focused on the wrong targets when considering aircraft control. One is judged by his/her knowledge of the protections - with little or no emphasis placed on degraded modes." To which I would add: one is judged largely, though not exclusively, in recurrent training sessions by one's knowledge and operation of the autoflight system when everything is going right, and not by one's ability to take over the airplane, skillfully and with knowledge, until the automatics are happy again.

Non-use of the autoflight system in recurrent training and, for the most part, in flight, is discouraged and is taken as a sign that one doesn't know the autoflight system thoroughly enough. The fact that guys refused to disconnect the autothrust when offered (as long ago as 15 years now) was and is a canary-in-the-mine, along with other signs, as far as I'm concerned. And because there are hundreds of ways to fail at doing this but actual failure is minimal, and very few ways to do it all correctly yet millions of examples of it being done well, warning systems which cater to rare inattention or, less rare, lack of airmanship and a growing surface knowledge of one's profession just kick that can down the road a bit.

I'm taking a rest from this for a while, to think.

Cheers!

Great posts from airmen here, and some others

As a non-heavy pilot, I have really enjoyed the posts here and "meeting" the pilots and engineers. I also appreciate the camaraderie and the warm welcome I have received, being a lite pilot and all that.

This quote applies to the heavy pilots as much as to my genre,

"Only the spirit of attack born in a brave heart will bring success to any fighter aircraft no matter how highly developed it may be" - Galland

We initial cadre of the Viper took this to heart, and despite all the "protections", or what we called "limits", imposed by our FBW flight controls, we did just fine. That being said, the jet was so easy to fly that we lost pilots just because of that. We did not have the cosmic auto-throttle or autopilot that the 'bus has. But the jet would still do what the engineers had programmed despite our excessive commands. For example, one test bird measured over a hundred pounds of back stick by the pilot. This was funny, as max command was about 34 pounds. Nevertheless, the jet gave you everything it could without departing from controlled flight. We quickly became "spoiled".

So I question the current mentality of the folks up front on my airliner. Are they simply system monitors or are they pilots?

Do they spend more time in the sim setting up the flight management system routes, waypoints and altitudes and such than considering what they would do when a flock of geese entered both engines shortly after takeoff?

I realize that the sims prolly don't handle "out of the envelope" flight conditions very well. But my experience on a sim flight check was that the instructor threw everything in the book at you. So I imagine that the major carriers are now exposing the crews to the UAS problem and loss of other data that the flight control system uses.

I doubt that the stall recovery techniques we have discussed here will get much attention. Why? Because most here would not have held back stick after the airspeed went south, but we would have simply kept the attitude and power we had when the event occurred. Then figure out what the hell was happening.

Look at this, and imagine what I did... gear up and then....

Well, left stick and the sucker kept flying. Reduce power to stay at the same speed as I knew the thing was still flying at that speed so why be a Chuck Yeager?

The FBW was giving me max left roll except for the extra pound or two of stick command I was applying. So the FBW prevented a serious situation. Not to imply the jet was easily controlled, but we got her back on the ramp in one piece, and I had a stiff drink shortly thereafter.

So how would the current crop of "system monitors" react to something similar? And we did not have over 30 previous incidents - I was the first.

Join Date: Aug 2011

Location: Near LHR

Age: 57

Posts: 37

@PJ2,

Thanks for your comments. I note you're taking a break from this, as I will also be doing. I've learned new information from some people, including you, over the past few days, thanks.

Originally Posted by PJ2

Not to re-argue the matter, but we know that none of these crew actions were done with AF447 and for this reason (which I have elaborated upon in earlier posts), I concur with O.C., that this is primarily a performance/crew accident. There certainly are training-and-standards issues here as well, and there are airmanship, system knowledge and CRM issues. The HF Report will, (and should) be thick and deeply researched.

As I said (more than once) to O.C., I agree with that - the crew behaviour certainly appears to be the primary cause of this accident (which clearly implicates training etc.).

However, in BEA IR2 there was evidence of inappropriate UAS recognition (and therefore incorrect subsequent procedure) by several crews, not just AF447. It therefore seemed sensible to consider what could be done to help crews via improvements in the man/machine interface, especially since I have seen parallels to such "hidden decisions" in large computer systems, and their adverse effects on quick & efficient troubleshooting by humans.

What I have read over the last few days is that such changes are seen as unnecessary by some people, so I'll withdraw from the thread for the time being, but with my personal opinion (as a GA pilot & engineer, and therefore acknowledging that I don't have the experience to know the consequences) still being that improvements here may be a "net win", and hence worth investigating further, at least.

As you said, the HF part of the report will make interesting reading!

@TTex600

Hi,

As with PJ2, your comments about the state of current training and what pilots are being measured on (and hence what training is being driven to produce), are very interesting - and worrying. Unfortunately I also see this management drive to "train to meet tick-boxes" instead of "train to meet actual requirements" being done outside of aviation.

@safetypee,

Hi,

Another "thanks" from me for those links. Much earlier in these threads, I saw mention of the Dr Bainbridge "Ironies of Automation" paper which I read at the time. I'll see how the papers / discussions in your recent links relate to her conclusions. Some of the descriptions in her paper really resonated with me, as being human behaviour which I've seen and/or experienced.

@RR_NDB,

Hi Mac,

Thanks again for your recent posts & analysis. It will be interesting to see if the BEA recommend manufacturers to re-visit the current situation about UAS warnings / recognition.

Originally Posted by RR_NDB

Airbus SAS (and others) are processing UAS just to the System. The System is not fed with garbage. The pilots need to "process" any garbage through scan and brain.

Indeed - that extra workload on the crew, to figure out themselves (again) what has already been decided by the avionics, seems unnecessary & unhelpful.

@CONF iture,

Hi,

Originally Posted by CONF iture

When you read the memo, it does seem normal to have to deal with a continuous STALL warning without taking any necessary action ...

Thanks for highlighting this - very interesting. I hadn't recognised the correlation between my thoughts about the PF's treatment of the stall warning (i.e. deliberately ignoring it) and this document saying that the crew could sometimes get a stall warning with, as you say, no instructions to respect it using SOP. It's a shame that the English version of IR3 pages 63 & 64, which mention this document, didn't translate it.

This leads onto the concern that, if they ignore a stall warning for long enough, then it may no longer still be an "approach to stall" warning, but may now be a "we're stalled" warning!

Again, it'll be interesting to see how (or if) the HF part of the final report believes this document may have factored into the crew's (especially the PF's) treatment of the stall warning.

@RetiredF4,

Hi franzl,

Thanks for your comments. I see that you've mentioned all the main points I had been thinking about (and some more), and already explained them to O.C. much better than I was doing!

@HazelNuts39,

Hi,

Originally Posted by HazelNuts39

Originally Posted by Diagnostic

"This is a UAS situation, all my pitot probe pressures are different so I have to disconnect the AP - recommend you fly pitch & power which for this alt is X/Y"

The trigger was that the system detected a sudden drop in one of the three airspeed values. The "all pitot probe pressures are different" occurred much later at 2:12:xx when the ADR DISAGREE message was generated.

Thanks for the correction. I thought I had previously mentioned the sudden drop of airspeed values in another reply, but it must have been in a draft that I deleted, as I can't see it now. In the above quote I was giving one (obviously amateur and anthropomorphized) example message text, but hadn't meant it to be intended as accurate for any one specific situation (hence why I mentioned "X/Y" instead of specific values). I should have been more careful to avoid misinterpretation.

Originally Posted by HazelNuts39

Considering the multitude of possible causes and flight conditions, IMHO the computers cannot reliably identify UAS, but must leave the diagnosis of the problem to intelligent humans.

But decisions are already being made by the avionics about when the air data is unreliable, to then trigger the AP to disconnect etc. I understand that these decisions may not be perfect, but given that they exist already, I'm just suggesting that it is worthwhile exposing the reason for the avionics' decision to the crew - instead of expecting crew to figure out the reason for AP disconnect etc. again, and perhaps getting that analysis wrong (or at least getting distracted by trying to do it).

You bring up an interesting point - of those 2 effects caused by pitot blockage (airspeed discrepancies and rapid change of airspeed reading), I expect it's more difficult for humans to detect the rapid change of detected airspeed (unless they happen to be looking at the specific instrument at that moment) than to detect discrepancies, since immediately after a change, the (incorrect) speed may stabilise (as seems to have happened on AF447). I want to do some more reading and think about that.

@Old Carthusian,

Hi,

Yes! And I continue to acknowledge this, as I have done over the last few days.

I'm specifically looking deeper into an SOP issue (i.e. the SOP for UAS), but unfortunately (and despite further clarification from me) you keep raising a different part of the overall problem (training) without acknowledging any common ground regarding SOP. Indeed, yet again you've repeated that the outcome of a UAS is what is important, ignoring my point that the UAS recognition and procedure (or lack of it) followed by the crew, is also important.

You've also again asked for a "guarantee" from me, despite me pointing out how unreasonable that is. If I wanted to, I could also ask you to guarantee things about the opinions in some of your replies, which you couldn't do.

Therefore I don't see any value in further conversation if there is no mutual respect here, and I'll politely withdraw from further conversation with you for the moment. Thanks for your comments anyway.

[Added: Before someone reminds me, I know UAS is not an SOP, although the knowledge that the UAS Abnormal procedure exists counts as being an SOP, IMHO.]

Last edited by Diagnostic; 9th Apr 2012 at 01:02. Reason: Spelling & clarification inc SOP