F-35 Cancelled, then what?

Join Date: Mar 2011

Location: Sussex

Age: 66

Posts: 371

Likes: 0

Received 0 Likes

on

0 Posts

Moore's Law and Software Development

As I see it the recent sensor discussion highlights two elephants in the room.

The processors in the systems may well have been top of the range once, but they are far from it now. To keep everyone happy, it could be argued that the specifications of the sensors, the processors and thus the software need to change; the trouble is that this would add a number of years, and yet more risk, to the development program.

Or has all this been covered by concurrent development? I think not.

Interesting post, PhilipG.

Resolution is my main concern (maybe interest is a better word) for these sensors. 1 MP is roughly 1.2 million usable pixels. Assuming the sensor is square to cover its 90 x 90 degree field of view, that's a resolution of roughly 1,100 x 1,100 pixels, or just 12 pixels per degree. That is certainly not enough to judge a target's aspect accurately, or to see much detail, at much more than 3,000 metres (where one pixel covers around 4 m x 4 m). Maybe that's too far, come to think of it. As glad rag said, "FOV anyone?" I can see why the pilot quoted earlier said he needs to eyeball the target to be able to fight it, especially as it is a 2D image from a single sensor.

As Maus92 has said, increasing that resolution significantly will certainly challenge processing and network speed. I think it was JSFFan with whom I tried to discuss this a couple of years ago, after I mentioned that the pilot can't see out the back.

I look forward to the unclassified reports on the acuity and usability of the system in due course. There may be some material out there already, but I'm not sure the system, including the software, is mature enough for them to be meaningful yet.
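The pixels-per-degree arithmetic above can be reproduced with a short sketch. It assumes, as the post does, a square array of about 1.2 million usable pixels spread evenly over a 90 x 90 degree field of view, and it uses a small-angle approximation for the pixel footprint at range; the function names are illustrative only, not anything from the DAS itself.

```python
import math

def pixels_per_degree(usable_pixels: float, fov_deg: float) -> float:
    """Linear pixels per degree for a square array spread evenly over a square FOV."""
    side = math.sqrt(usable_pixels)  # pixels along one edge of the square array
    return side / fov_deg

def pixel_footprint_m(ppd: float, range_m: float) -> float:
    """Approximate width in metres covered by one pixel at a given range (small-angle)."""
    pixel_angle_rad = math.radians(1.0 / ppd)
    return range_m * pixel_angle_rad

ppd = pixels_per_degree(1.2e6, 90.0)        # assumed 1.2 MP usable, 90 degree FOV
footprint = pixel_footprint_m(ppd, 3000.0)  # footprint at 3,000 m
print(f"{math.sqrt(1.2e6):.0f} px per side, {ppd:.1f} px/deg, "
      f"{footprint:.1f} m per pixel at 3 km")
```

This works out to about 1,095 pixels per side, about 12.2 pixels per degree and roughly 4.3 m per pixel at 3,000 m, in line with the rounded figures in the post.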

Last edited by Courtney Mil; 17th Jul 2015 at 11:17.

Join Date: Jun 2009

Location: Earth

Posts: 125

Originally Posted by peter we

12 MP is the resolution of 35 mm film

The contrast (or lack of it) in a picture is mainly a property of the lens, which is why old Hasselblad/Zeiss models are still among the most expensive and sought-after, even today.

Originally Posted by Maus92

A super-simplified calculation means that the image processor is pushing well in excess of 200 MBps of raw data onto the network

Receiving and decoding, on the other hand, may not be so real-time.
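Maus92's "super simplified calculation" is not spelled out in the thread. One plausible version multiplies the number of cameras, pixels per frame, bytes per pixel and frame rate. The values below (six cameras, 1.3 MP each, 8 bits per pixel, 30 frames per second) are assumptions chosen for illustration, not published DAS specifications, and compression is ignored.

```python
def raw_data_rate_mb_per_s(cameras: int, pixels_per_frame: float,
                           bits_per_pixel: int, frames_per_s: float) -> float:
    """Uncompressed video data rate in megabytes per second (1 MB = 1e6 bytes)."""
    bytes_per_frame = pixels_per_frame * bits_per_pixel / 8
    return cameras * bytes_per_frame * frames_per_s / 1e6

# Assumed, illustrative values only (not official DAS figures).
rate = raw_data_rate_mb_per_s(cameras=6, pixels_per_frame=1.3e6,
                              bits_per_pixel=8, frames_per_s=30)
print(f"~{rate:.0f} MB/s of raw pixel data")
```

With these assumptions the result is about 234 MB/s, consistent with "well in excess of 200MBps"; deeper pixels or a higher frame rate would push it up proportionally.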

Originally Posted by Radix

Again, I was under the impression that it would work against any vehicle with its engine running.

I guess LM/NG did a pretty good job of 'impressing', at least in my case.

Last edited by NITRO104; 17th Jul 2015 at 11:22.

Join Date: Aug 2014

Location: New Braunfels, TX

Age: 70

Posts: 1,954

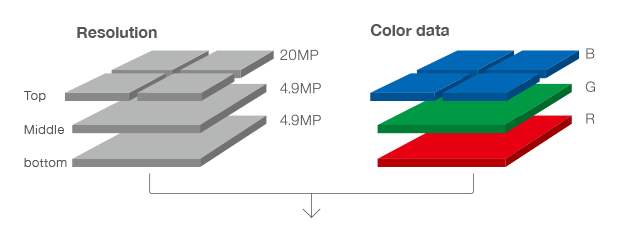

My understanding is that it's a 4MP array. And to put this in perspective, a 12MP color sensor in a consumer camera also has 4MP resolution.

Only those with a Foveon sensor, which are a tiny percentage of consumer cameras. The common Bayer sensor would be a true 12MP.

And DAS is monochrome, so a 4MP sensor provides 4MP of resolution. But unlike a consumer camera, it sees way down into the infrared.

Last edited by KenV; 17th Jul 2015 at 12:47.

Join Date: Apr 2005

Location: in the magical land of beer and chocolates

Age: 52

Posts: 506

Originally Posted by KenV

And DAS is monochrome, so a 4MP sensor provides 4MP of resolution. But unlike a consumer camera, it sees way down into the infrared.

There is a reason some extra support is needed, such as the initially unforeseen addition of a separate NVG set.

The DAS upgrade cannot be an easy one. An increase from 1 to 4 MP (or, to be exact, from 1.3 to 4.2 MP) will require a considerable increase in processing speed and bandwidth to keep latency and jitter under acceptable levels; even 150 ms in a fast jet is barely acceptable.
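If frame rate and bit depth stay fixed, every stage of the pipeline has to move about 3.2 times as many pixels per frame in the same time. The sketch below simply computes that ratio from the 1.3 and 4.2 MP figures in the post, and how many frame periods a 150 ms latency spans at an assumed 30 frames per second (the frame rate is an assumption, not a published DAS figure).

```python
def scaling_factor(old_mp: float, new_mp: float) -> float:
    """Ratio of pixels per frame after an upgrade to pixels per frame before it."""
    return new_mp / old_mp

def frames_within_latency(latency_ms: float, frames_per_s: float) -> float:
    """How many frame periods elapse during a given end-to-end latency."""
    return latency_ms * frames_per_s / 1000.0

factor = scaling_factor(1.3, 4.2)            # figures from the post
frames = frames_within_latency(150.0, 30.0)  # 30 fps is an assumption
print(f"{factor:.2f}x pixels per frame; {frames:.1f} frame periods within 150 ms")
```

At a fixed frame rate that ratio applies to bandwidth and per-frame processing alike; if the pipeline cannot absorb it, the shortfall shows up as extra latency.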

As I said previously, the EOTS part of the EODAS package is even more complicated and, even today, before IOC, already obsolete compared with the latest LITENING-series pods and the THALES pod that will come online within just a few years.

The integrated EOTS is even more complicated to upgrade than the DAS.

What I also wonder about is what flying through a virtualized image will do to eye fatigue during prolonged use, and then there is, of course, the issue of depth perception.

Wrong again, KenV.

Regarding the Foveon X3 Sensor:

The photodiodes are stacked, not three individual "sensor pixels". Sigma refer to them as "x3" because the 15MP sensor uses 4600x3200x3 photodiodes, but only 15ish million photosites.

Originally Posted by Wikipedia

It uses an array of photosites, each of which consists of three vertically stacked photodiodes, organized in a two-dimensional grid. Each of the three stacked photodiodes responds to different wavelengths of light; that is, each has a different spectral sensitivity curve. This difference is due to the fact that different wavelengths of light penetrate silicon to different depths. The signals from the three photodiodes are then processed, resulting in data that provides the amounts of three additive primary colors, red, green, and blue.
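The photosite/photodiode distinction can be made concrete: in a stacked design the photosite count is width times height, and the photodiode count is three times that. The 4600 x 3200 dimensions are taken from the post as given; `foveon_counts` is a hypothetical helper for illustration.

```python
def foveon_counts(width: int, height: int, layers: int = 3) -> tuple[int, int]:
    """Return (photosites, photodiodes) for a stacked-photodiode sensor."""
    photosites = width * height        # one photosite per x/y position
    photodiodes = photosites * layers  # stacked diodes at each photosite
    return photosites, photodiodes

sites, diodes = foveon_counts(4600, 3200)
print(f"{sites:,} photosites, {diodes:,} photodiodes")
```

That gives about 14.7 million photosites (the "15ish million") but about 44 million photodiodes.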

Join Date: Aug 2014

Location: New Braunfels, TX

Age: 70

Posts: 1,954

KenV,

As you should understand, there is a big difference between getting a higher resolution out of a 4MP sensor for a stills camera and doing so for a real-time video camera.

BTW, check out the actual resolutions of modern DSLRs. Once again your information is out of date.

And BTW, here are a few other chip-resolution factoids for comparison (just a reminder: a comparison is relative, NOT absolute as you implied in your post):

1. Each of the Hubble Space Telescope's two main cameras has 4 CCDs of 0.64MP. That's 2.56MP total resolution.

2. The Mars Rovers have 2MP sensors.

3. The LORRI camera on the New Horizons space probe imaging Pluto has a 1024 x 1024 sensor, or just over 1MP resolution.

"Abysmal", huh?

If you left active service in 1985, I suspect some of your military knowledge is similarly dated.

I was a USNA graduate, which meant that I received an active commission in the unrestricted line. Active commission meant I served in the active forces rather than the reserve forces, and unrestricted line meant I was eligible for command at sea. In 1985 I resigned from active duty and entered the reserves, still unrestricted line. So my active commission became a reserve commission and I served in the Naval Reserve. But being a USNA grad I serve "at the pleasure of the President" for life, meaning he could re-activate me at any time. I was flying F-18s in the reserves out of NAS Lemoore when I got activated for Gulf War 1, otherwise known as Desert Shield/Desert Storm. Afterwards I continued flying in theater on Operation Southern Watch, enforcing the no-fly zones over Iraq, and then at NAS Lemoore in Central California. And if you'd given this just a bit of thought rather than jumping to a conclusion, you would have remembered that there was no such thing as an F/A-18C in 1985.

Last edited by KenV; 17th Jul 2015 at 14:47.

Join Date: Aug 2008

Location: Virginia

Posts: 192

Strange, on the KC-46A thread you claimed you were a P-3 driver for many years....

"One more BTW. I also operated the P-3C for several years. When I was on a 12 hour or longer mission over blue water and was loitering one or more engines during the mission and operating at both high and very low altitudes and operating at max range cruise AND max endurance cruise during different parts of the same mission, and expending stores during the mission, I made damned sure I was certain about my fuel computations. So your assumption about my awareness of fuel density on aircraft performance is a fail."

Or did you forget that part?

Join Date: Dec 2010

Location: Middle America

Age: 84

Posts: 1,167

None of the space imagers you list is trying to capture a 90 x 90 degree FOV in a single frame; it takes hours to complete a high-res image with them. But then no one's trying to use the image in real time to operate an aircraft. So I don't see your comparison as relevant.

You call it "Active Duty", fine by me.

Join Date: Apr 2005

Location: in the magical land of beer and chocolates

Age: 52

Posts: 506

Some here seem to doubt the validity of Ken's claims about his service.

I have no reason to doubt his claims; as far as I know, and looking at his CV, it all seems genuine.

I'm happy to debate his perceptions and ideas on modern-day fighters like the F35. I'm not going to agree with everything he has to say, but I don't think he's just sucking it out of his thumb.

Ken,

Maybe you should go upstairs to your boss and ask him to make the thing we really want and need: a real NG LWF.

Join Date: Mar 2011

Location: Sussex

Age: 66

Posts: 371

Our dear friend KenV has fallen into the same old trap. Years ago, when the Hubble Space Telescope was new, the resolution of its cameras was cutting-edge. Things move on, as have consumer and professional photography, to say nothing of computing power. The F35 was specified at the cutting edge of technology some years ago; things have moved forward since, and most of the processing devices on board are probably obsolete. I seem to recall that the USAF bought up the last batch of processors that could be fitted to the F22 some years ago. Even with two-year phone contracts, the increase in power, resolution, capacity etc. of your smartphone is amazing. Think of the phone you had when LM won the JSF competition and the one you have today, then recall that the F35 specs have not changed much since then and the system has yet to be shown to work as advertised.
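The phone comparison is essentially Moore's law at work. A rough sketch, assuming the popular rule of thumb that transistor counts double about every two years, and taking LM's JSF win in 2001 and the 2015 date of this thread as end points, estimates the growth factor. Real processor performance does not track this rule exactly, so treat the result as an order of magnitude only.

```python
def moore_growth(start_year: float, end_year: float, doubling_years: float = 2.0) -> float:
    """Growth factor if capability doubles every `doubling_years` (rule of thumb)."""
    return 2 ** ((end_year - start_year) / doubling_years)

factor = moore_growth(2001, 2015)  # assumed two-year doubling period
print(f"~{factor:.0f}x")
```

Fourteen years at a two-year doubling period is seven doublings, or roughly a 128-fold increase.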

As I posted before, if the sensor suite is to be brought up to the standard of other sensors now available or under development, I would imagine that the cost would be astronomical and the delay at least two years.

Join Date: Aug 2014

Location: New Braunfels, TX

Age: 70

Posts: 1,954

Oh my. Consider for a millisecond why I used the terms "sensor pixel" and "image pixel" in my post. The difference is important and clearly the difference escaped you, leading you to make yet another false assumption and yet another hilarious conclusion.

I said: "A Foveon sensor requires one sensor pixel for each image pixel, because a Foveon sensor can detect all three colors at each pixel." Your assumption that a sensor pixel equates to a photodiode is utterly false. In this usage, a sensor pixel equates to a photosite. Each sensor pixel/photosite in a Foveon sensor is a stack of three photodiodes, with each photodiode responding to a different photo wavelength. Thus each pixel/photosite in a Foveon sensor responds to all three photo wavelengths. Or put another way, a Foveon sensor requires one sensor pixel to generate one image pixel (color).

In a Bayer sensor, each sensor pixel/photosite only responds to one of three photo wavelengths. Thus a Bayer sensor requires three sensor pixels to generate one image pixel (color).

In a monochrome system each sensor pixel/photosite results in an image pixel. Thus a monochrome sensor requires one sensor pixel to generate one image pixel.
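The accounting above can be written down as a ratio of sensor pixels (photosites) per image pixel. The ratios for Foveon, Bayer and monochrome are the ones stated in the post; because the thread disputes the Bayer figure (a conventional Bayer camera demosaics to one output pixel per photosite), a second, hypothetical "demosaiced" entry is included for comparison. The dictionary and function names are illustrative.

```python
# Sensor pixels (photosites) needed per output image pixel, per the claims in the thread.
PHOTOSITES_PER_IMAGE_PIXEL = {
    "foveon": 1,            # three stacked photodiodes at every photosite
    "bayer_kenv": 3,        # accounting in the post: one photosite each for R, G, B
    "bayer_demosaiced": 1,  # conventional cameras interpolate one output pixel per photosite
    "monochrome": 1,        # one photosite, one grey-level image pixel
}

def image_megapixels(sensor_mp: float, sensor_type: str) -> float:
    """Image resolution implied by a sensor's photosite count under a given accounting."""
    return sensor_mp / PHOTOSITES_PER_IMAGE_PIXEL[sensor_type]

for kind in PHOTOSITES_PER_IMAGE_PIXEL:
    print(f"12 MP {kind}: {image_megapixels(12, kind):.0f} MP image")
```

The two Bayer entries bracket the disagreement: 4 MP under the three-photosites-per-pixel accounting, 12 MP under demosaicing; the monochrome case gives 1:1 either way.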

Ecce Homo! Loquitur... (Behold the man! He speaks...)

If you were at the Academy at the same time as Brugal, presumably you got your wings at the same time as him. Pretty short active career then, 1981-1985.

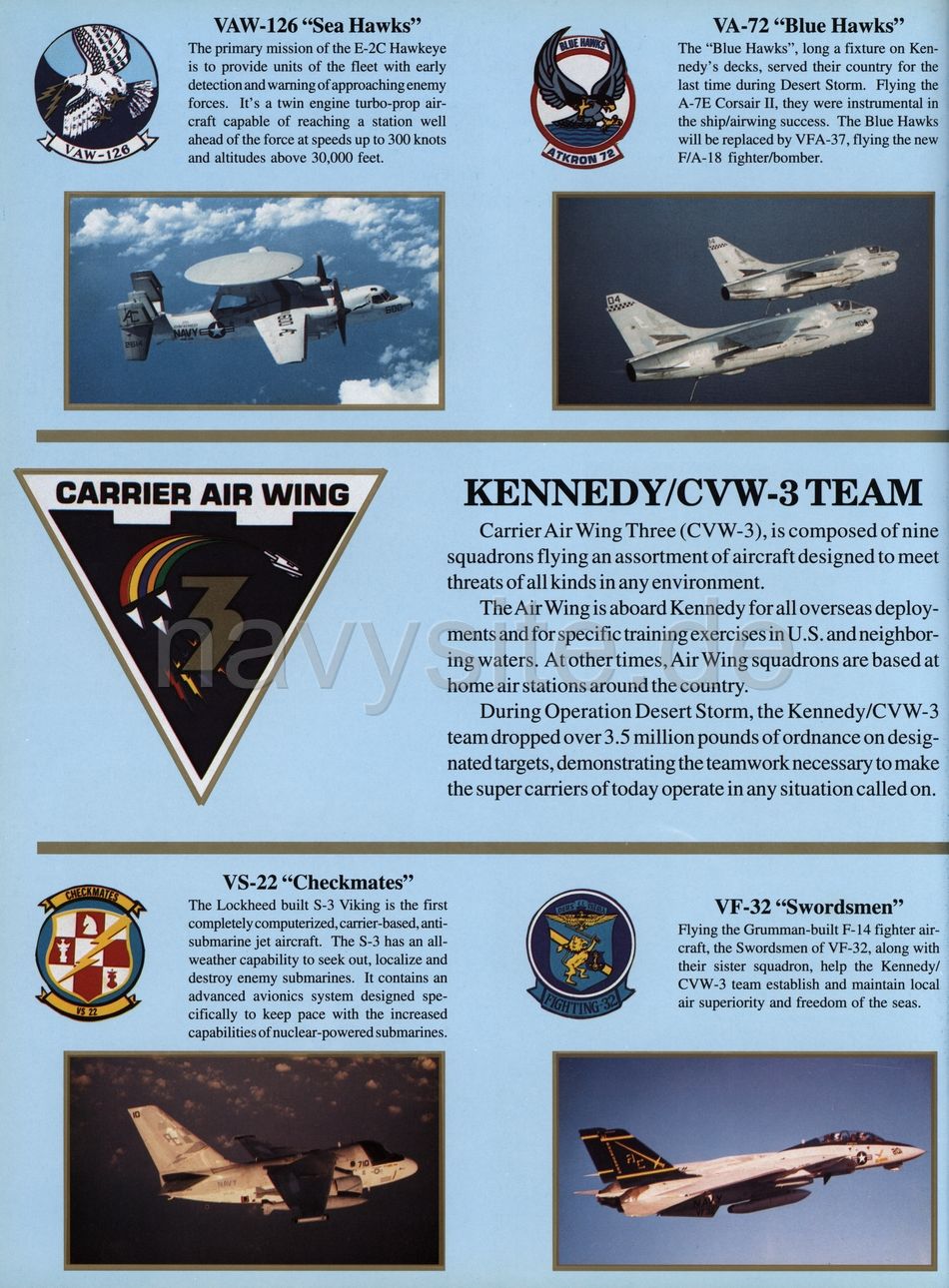

And if you served on the same carriers as he did, presumably you must also have flown F-4s, and later F-14s? After all, the JFK didn't carry F-18s, just F-14s, A-7s, S-3s, etc. Pretty fast conversion, huh?

Just a few holes (sorry, mystifying discrepancies), which I am sure you can explain...

KenV:

Drew Brugal:

KenV:

JFK (CV-67) Cruise Book 1990-1991:

KenV:

Let's just say that Drew Brugal is a classmate of mine. We were plebes in the same company at USNA and we both served on the same carriers.

Andrés A. (Drew) Brugal......is a member of the 1979 graduating class of the United States Naval Academy and earned his Naval Aviator Wings in August 1981.

His first operational assignment was Fighter Squadron ONE-FIVE-FOUR (VF-154), stationed at NAS Miramar, California flying the F-4 "Phantom" off USS CORAL SEA (CV-43). While in VF-154, he transitioned to the F-14 "Tomcat" flying off USS CONSTELLATION (CV-64).

In 1984, Captain Brugal received orders to Training Squadron TWENTY-TWO (VT-22) NAS Kingsville, Texas as a flight instructor and Wing Landing Signal Officer, flying the A-4 "Skyhawk". While in VT-22, he was recognized as the 1988 Training Wing TWO Instructor of the Year and the Kingsville Chamber of Commerce Junior Officer of the Year. Returning to the "Tomcat" in October 1989, Captain Brugal reported to Fighter Squadron Three-Two (VF-32) at NAS Oceana, Virginia. While with VF-32, he deployed aboard USS JOHN F. KENNEDY for Operations DESERT SHIELD and DESERT STORM......

I left ACTIVE DUTY (not "active service") in 1985. I don't know how the UK system works, but here's how it works in the USN.

I was a USNA graduate, which meant that I received an active commission in the unrestricted line. Active commission meant I served in the active forces rather than the reserve forces, and unrestricted line meant I was eligible for command at sea. In 1985 I resigned from active duty and entered the reserves, still unrestricted line..... I was flying F-18s in the reserves out of NAS Lemoore when I got activated for Gulf War 1, otherwise known as Desert Shield/Desert Storm.

Join Date: May 2000

Location: UK and where I'm sent!

Posts: 519

Originally Posted by Courtney Mil

Resolution is my main concern (maybe interest is a better word) for these sensors. 1 MP is roughly 1.2 million usable pixels. Assuming the sensor is square to cover its 90 x 90 degree field of view, that's a resolution of roughly 1,100 x 1,100 pixels, or just 12 pixels per degree. That is certainly not enough to judge a target's aspect accurately, or to see much detail, at much more than 3,000 metres (where one pixel covers around 4 m x 4 m). Maybe that's too far, come to think of it.

Originally Posted by Radix

Still, that would make a low-res picture without zoom. You can never spot detail if you use 4MP to cover a large FOV at a distance of several nm. Assuming DAS has zero zoom capability, it gets really difficult to track multiple targets a few nm away, because they will only be a few pixels across, particularly since the jet will be moving all the time as well, leading to motion blur. This aligns with the statement of the pilot in the interview.

It does not look like the sensor resolution is anywhere near high enough.
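The point about targets being only a few pixels across can be sketched with the same small-angle arithmetic. The sketch assumes a 4 MP square array (2,000 x 2,000) over a 90 degree field of view, a 15 m target and a range of 3 nm; all of these are illustrative assumptions, and real detection also depends on contrast, optics and processing.

```python
import math

NM_TO_M = 1852.0  # metres per nautical mile

def target_pixels(target_size_m: float, range_nm: float,
                  pixels_across: int, fov_deg: float) -> float:
    """Approximate number of pixels a target spans (small-angle approximation)."""
    angle_deg = math.degrees(target_size_m / (range_nm * NM_TO_M))
    return angle_deg * pixels_across / fov_deg

px = target_pixels(15.0, 3.0, 2000, 90.0)  # assumed target, range and array
print(f"~{px:.1f} pixels across")
```

Under these assumptions a 15 m aircraft at 3 nm spans only about 3.4 pixels, before any motion blur is considered.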

I recently saw the Gen III helmet and it is a bit of a monster. It's light for its bulk and the position-tracking demo we saw looked pretty solid, although it was in a lab, not a moving aircraft. None of us got to try it, but the guy doing the demo did say that it's a bit slow if you snap your head from looking out one side of the canopy to the other. It takes only milliseconds to catch up, but it is noticeable. I don't have much technical detail to share and the display was not fed from the real DAS, so it doesn't help much with the resolution question.

If they were to start the architecture from scratch today (knowing what they already do), I expect they could come up with something useful. As it is, I sadly expect that this part of the programme is going to be playing catch up for a while yet.

Ummm, you got that backwards. A 12MP Bayer array requires three sensor pixels for each image pixel, one pixel for red, one pixel for green, and one pixel for blue. A Foveon sensor requires one sensor pixel for each image pixel, because a Foveon sensor can detect all three colors at each pixel. So a camera with a Bayer 12M pixel sensor generates a 4MP image resolution.

I also have a Sigma DP1 14M pixel Foveon sensor camera, which outputs images at 2640 x 1760 pixels; that gets you about 4.65 million (roughly 14 million divided by three).

Looks right to me.
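The Sigma DP1 figures can be checked directly: the output image size multiplied out, against the marketed 14 million divided by three stacked layers. The numbers are those given in the post.

```python
width, height = 2640, 1760
image_pixels = width * height      # output image size quoted for the DP1
third_of_marketed = 14e6 / 3       # marketed "14M" divided by three stacked layers
print(f"{image_pixels:,} vs {third_of_marketed:,.0f}")
```

The two agree to within about half a percent, which is consistent with one photosite per output image pixel on a Foveon camera.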

Join Date: Aug 2014

Location: New Braunfels, TX

Age: 70

Posts: 1,954

Strange, on the KC-46A thread you claimed you were a P-3 driver for many years....

"One more BTW. I also operated the P-3C for several years. When I was on a 12 hour or longer mission over blue water and was loitering one or more engines during the mission and operating at both high and very low altitudes and operating at max range cruise AND max endurance cruise during different parts of the same mission, and expending stores during the mission, I made damned sure I was certain about my fuel computations. So your assumption about my awareness of fuel density on aircraft performance is a fail."

Or did you forget that part?

After graduating from USNA I received my commission and entered flight school in Florida and Texas. Upon graduation from flight school and completing RAG training I flew A-4 Skyhawks out of NAS Lemoore. While serving there, my jet suffered a catastrophic turbine failure at high speed (just under 400 KIAS) at low altitude (about 100 ft AGL) and I ejected. I was on the ragged edge (which side of the edge is still under debate) of the ESCAPAC ejection seat's envelope, and while I survived, things did not go entirely well.

USN allowed me to stay in and keep flying, but I was required to transition to anything not equipped with an ejection seat, which in USN essentially meant a helo or a P-3. Flying a helo in USN meant operating off the back of frigates, not my idea of fun, so I selected the P-3. I went to Corpus Christi, where I received multi-engine training, and then completed RAG training (excuse me, by then called FRS training).

I flew one active duty deployment in P-3s. Then I transitioned to the reserves, continuing to fly P-3s. But when the Soviet Navy went away in the 80s, the P-3 mission went away with it. I was RIFed and joined the civilian line. But with the airlines hiring like crazy (plus an out-of-control RIF process), USN was losing Tacair pilots by the boatload, and after a year or so they offered to hire me back based on my previous Tacair experience. I reminded them of their "no ejection seats" restriction, and BUPERS and NAMI said NAVAIR had this cool new airplane called the Hornet that had a new high-tech Martin Baker ejection seat and I would be OK to fly that. I agreed.

I completed FRS training and then completed all my day/night carrier quals, day/night refueling quals, weapons quals, etc, etc as a reservist just in time to get activated for Desert Shield/Desert Storm. I continued to fly Hornets (mostly as a reservist) till I departed USN for good after Gulf War 2. Although technically, since I serve "at the pleasure of the President" for life, I could still get called up, but I doubt Obama will have me. And since my earlier ejection adventure is now catching up with me (flailing injuries have loonnggg term effects, many delayed) I'm sure NAMI will no longer allow me in a cockpit, certainly not as command pilot.